The term MDM often has negative connotations: it can be understood as yet another system to buy, or as a very expensive and complex process to implement without clear, tangible value or measurable ROI (return on investment). Both interpretations could not be more wrong if you really understand what you are doing.

When talking about MDM, the first thing to understand is that MDM is not only a system: it's a combination of roles, responsibilities and processes enabled by technology. Or, as I like to think of it, MDM is a way of thinking about data management and seeing it as a big picture.

Secondly, it's true that the value of MDM is hard to prove, but that does not mean there is no value at all. To better understand the concept of MDM, you can read our previous blog post, Master Data Management explained. But now, let's dig deeper into the four drivers for managing your data.

1. MDM reduces costs by optimizing and automating data management processes

One of the key features of master data is that it's used in several systems across the organization, for either operational or analytical purposes. But how is the data managed in those systems? Is it created manually, or is it integrated from somewhere else?

Every time a person creates a new data record or updates an existing one, it takes time, and time is money. If a new customer record is needed in five different systems (e.g. marketing, prospects, CRM, financial and data warehouse), there is a huge difference in the time it takes and the costs it generates depending on whether the update is done manually in each system or once in one system that is integrated with the others. Also, if the creation or update process is very complex, e.g. if approval or data enrichment is required from different people, it takes more time, which again generates more costs.

The example in Table 1 below highlights two different scenarios for master data maintenance costs (including creation, update and deletion). In the first scenario, data maintenance is done in only one central system using a simplified process. The maintained data is automatically exported through integrations to the other systems that need the same data.

In the second scenario, there are no automatic integrations, so data maintenance is done separately in every system that needs the data. Because these are operational systems, data maintenance in them often requires more or less complex processes. The time used for data maintenance includes the time of everyone who takes part in the process (e.g. creator, approver). The cost of time is based on the assumption that an employee costs the employer about 50 € per hour, including salary and other costs.

| | SCENARIO 1: Data maintenance in one system, optimized process, automated data integrations | SCENARIO 2: Data maintenance in several systems, complex processes, manual data integrations |
|---|---|---|
| Number of systems where data maintenance is needed | 1 | 10 |
| Time used for data maintenance per transaction, per system | 5 min | 10 min |
| Cost of the time per transaction, per system | 4,17 € | 8,33 € |
| Number of data maintenance transactions per year | 1000 | 1000 |
| Total cost per year | 4 170 € | 83 300 € |

Table 1. Two scenarios for master data maintenance costs.

The example above does not take into account the licensing and development costs of building the MDM capability and the integrations that enable automated data exchange.

However, the example clearly shows that by minimizing the time needed to create or update a data record, and the amount of manual work needed for data management as a whole, you can save a significant amount of money in the long run.
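The figures in Table 1 follow from a simple formula: the cost of one transaction in one system (minutes ÷ 60 × 50 €), multiplied by the number of systems and by the yearly number of transactions. The Python sketch below reproduces them. The function and constant names are illustrative, and the per-transaction cost is rounded to the cent because that appears to be how the table's totals were derived (4,17 € × 1000 rather than 4,1666… € × 1000).

```python
from decimal import Decimal, ROUND_HALF_UP

# Employer cost of one working hour, as assumed in the text (salary plus other costs).
HOURLY_COST_EUR = Decimal("50")


def annual_maintenance_cost(systems: int, minutes_per_system: int,
                            transactions_per_year: int) -> Decimal:
    """Yearly cost of maintaining master data separately in `systems` systems."""
    # Cost of one transaction in one system, rounded to the cent as in Table 1.
    cost_per_system = (HOURLY_COST_EUR * minutes_per_system / 60).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP)
    return cost_per_system * systems * transactions_per_year


scenario_1 = annual_maintenance_cost(systems=1, minutes_per_system=5, transactions_per_year=1000)
scenario_2 = annual_maintenance_cost(systems=10, minutes_per_system=10, transactions_per_year=1000)
print(scenario_1, scenario_2, scenario_2 - scenario_1)  # 4170.00 83300.00 79130.00
```

Using `Decimal` instead of `float` keeps the euro amounts exact, which matters once costs are summed over many transactions.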

And imagine what other valuable work employees could be doing if they didn't have to spend time on complex data maintenance tasks in multiple systems.

On the other hand, complex data management tasks may also affect people's attitude toward data maintenance itself. If all data maintenance tasks are really complicated, people tend to avoid them, which may result in poor data quality. Easy and intuitive processes mean happy employees who can concentrate on their actual work rather than spending time on data maintenance.

2. MDM reduces costs by decreasing the friction in the operative processes

One of the clearest indicators of poor master data management is bad data quality. Bad data quality tends to cause errors in operative processes such as sales, manufacturing and shipping. If, for example, the same customer record is saved in several systems, do you know which system has the latest and most accurate information about the customer? If the data is managed separately in each system, different operative processes may use different data.

As an example, a customer company has bought a product and requested direct delivery to one of its plants. The delivery address is saved in the system where sales are handled. However, shipping is done based on the data in the ERP system, where supply chain management is handled. If the customer data is not in sync between the sales and ERP systems, there is a risk that the delivery address in ERP is incorrect. The product may then be accidentally sent to the customer's headquarters instead of the plant to which the delivery was originally requested.
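A mismatch like this can be detected by comparing the same customer across both systems. The Python sketch below assumes, hypothetically, that both the sales system and the ERP expose customer records keyed by a shared customer ID (in practice, a reliable shared key is often exactly what is missing without MDM). The record type, function name and addresses are illustrative. Note that such a check only detects the symptom; it does not fix the root cause.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerRecord:
    customer_id: str
    delivery_address: str


def find_out_of_sync(sales: dict[str, CustomerRecord],
                     erp: dict[str, CustomerRecord]) -> list[str]:
    """Return IDs of customers whose delivery address differs between the two systems."""
    mismatches = []
    for customer_id, sales_record in sales.items():
        erp_record = erp.get(customer_id)
        # A customer missing from ERP is also a synchronization problem.
        if erp_record is None or erp_record.delivery_address != sales_record.delivery_address:
            mismatches.append(customer_id)
    return sorted(mismatches)


sales = {"C1": CustomerRecord("C1", "Plant Road 5, Tampere")}
erp = {"C1": CustomerRecord("C1", "Head Office Street 1, Helsinki")}
print(find_out_of_sync(sales, erp))  # ['C1']
```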

This kind of error obviously generates extra costs. First, the result of the failed process needs to be fixed, e.g. the product is re-delivered to the correct address. Second, the bad data needs to be fixed to prevent the error from happening again. Let's say that fixing the error costs about 500 €, including the employees' time, re-delivering the product, compensation for the client, etc. If this is a one-time event, it's not a big issue and the cost can be absorbed. But if the root cause is not fixed, the error will most likely be repeated. If similar errors occur, let's say, 100 times a year, the costs already add up to 50 000 €.
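The arithmetic is simple, but it helps to make the time dimension explicit: as long as the root cause remains, the cost repeats every year. A minimal Python sketch, assuming (as a simplification not stated in the text) that both the 500 € cost per error and the error rate stay constant over time; the function name is illustrative.

```python
def recurring_error_cost(cost_per_error: float, errors_per_year: int, years: int = 1) -> float:
    """Total cost of a data error that keeps recurring because its root cause is not fixed."""
    return cost_per_error * errors_per_year * years


print(recurring_error_cost(500, 100))           # 50000
print(recurring_error_cost(500, 100, years=3))  # 150000
```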

In addition to the direct costs mentioned above, there are also indirect costs, which are often overlooked. For example, wrong deliveries may affect the customer's attitude so that the next time they need something, they turn to another provider who can deliver to the correct address. Bad experiences are also often shared, and in the worst case other customers or prospects may begin to doubt the company's trustworthiness and turn to competitors. At the end of the day, this may have a major impact on sales and income.

By managing the master data, refining the data management processes and using suitable technologies to support them, you can decrease the number of errors, i.e. friction, caused by data issues in the operative processes.

For example, if the customer master data is managed only in one system and then automatically integrated to other systems utilizing that data, you can be sure that the data is in sync between the systems.
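In its simplest form, this is a publish/subscribe pattern: the master system holds the record, and every change is pushed to the systems that consume it. The in-memory Python sketch below illustrates the idea. `CustomerMasterHub`, `local_copy` and the `crm`/`erp` stores are hypothetical stand-ins for real systems and integration middleware; a production setup would also need error handling, retries and ordering guarantees.

```python
from typing import Callable

# A consuming system is modeled as a callback that receives (customer_id, record).
Subscriber = Callable[[str, dict], None]


class CustomerMasterHub:
    """Hypothetical single place where customer master data is maintained."""

    def __init__(self) -> None:
        self._records: dict[str, dict] = {}
        self._subscribers: list[Subscriber] = []

    def subscribe(self, subscriber: Subscriber) -> None:
        self._subscribers.append(subscriber)

    def upsert(self, customer_id: str, record: dict) -> None:
        # The record is created or updated once, here...
        self._records[customer_id] = dict(record)
        # ...and pushed to every consuming system, so their copies stay in sync.
        for notify in self._subscribers:
            notify(customer_id, dict(record))


def local_copy(store: dict[str, dict]) -> Subscriber:
    """Return a subscriber that writes updates into one system's local store."""
    def write(customer_id: str, record: dict) -> None:
        store[customer_id] = record
    return write


# Hypothetical downstream systems, each keeping its own copy of the data.
crm: dict[str, dict] = {}
erp: dict[str, dict] = {}

hub = CustomerMasterHub()
hub.subscribe(local_copy(crm))
hub.subscribe(local_copy(erp))
hub.upsert("C1", {"name": "Example Oy", "delivery_address": "Plant Road 5, Tampere"})
hub.upsert("C1", {"name": "Example Oy", "delivery_address": "Plant Road 7, Tampere"})
print(crm == erp, erp["C1"]["delivery_address"])  # True Plant Road 7, Tampere
```

Each subscriber receives its own copy of the record, so a downstream system modifying its local data cannot silently change another system's copy.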

3. MDM enhances decision making by providing better visibility on high-quality data

Nowadays, business decisions are usually based on data. It can be public data available from news, publications, statistics, social media, etc., or analyses of operational processes (sales, deliveries, invoicing, etc.).

When making decisions, it's very important that one can trust the data on which they are based. More importantly, one should be able to connect the data to other important data domains in order to see the bigger picture. The most important domains to connect with are usually master data domains. For example, what can you do with sales data if you cannot connect it to a customer, a product or a certain area, e.g. a country?
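Technically, connecting transactional data to master data is a join on a shared key. The Python sketch below uses hypothetical in-memory data to aggregate sales per country through the customer master. Sales whose customer ID has no master record (here `C9`) cannot be attributed to any country and are reported separately, which is exactly the kind of gap poor master data creates in reports.

```python
from collections import defaultdict

# Hypothetical customer master: customer ID -> country.
customer_country = {"C1": "FI", "C2": "SE"}

# Hypothetical sales transactions: (customer ID, amount in euros).
sales = [("C1", 1200.0), ("C2", 800.0), ("C1", 300.0), ("C9", 500.0)]


def sales_by_country(sales: list[tuple[str, float]],
                     customer_country: dict[str, str]) -> tuple[dict[str, float], float]:
    """Aggregate sales per country; also return the amount that could not be linked."""
    totals: dict[str, float] = defaultdict(float)
    unlinked = 0.0
    for customer_id, amount in sales:
        country = customer_country.get(customer_id)
        if country is None:
            # No master record: this sale cannot be attributed to any country.
            unlinked += amount
        else:
            totals[country] += amount
    return dict(totals), unlinked


print(sales_by_country(sales, customer_country))  # ({'FI': 1500.0, 'SE': 800.0}, 500.0)
```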

When master data management is handled poorly, even simple reports can be difficult to build reliably. For example, if you want a report that includes all customers, do you know in which systems the customer data is stored? And how do you know in which system the data is most reliable? Or, if you want a report showing which products or product types the customers have bought, are you able to reliably combine the different data domains with each other using some key information? Do you have visibility on the life cycle and validity of customer, product or other master data? Can you actually trust the data?

It's true that in a traditional data warehouse you can combine pretty much any data you want, as long as you find the correlation between the data objects. You can also build simple automatic match & merge logic based on decisions about which sources are more trustworthy than others. However, a data warehouse is used mainly for analytical purposes, and it doesn't take into account the data management aspect or the actual user who creates, updates or validates the data. Nor does a data warehouse fix the data quality.
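The merge half of such logic can be sketched as a source-priority ("survivorship") rule: for each attribute, take the value from the most trusted source that actually has one. The Python sketch below assumes the matching step has already grouped records that describe the same customer; `SOURCE_PRIORITY` and the sample records are hypothetical. Note that it only produces a merged view: the bad values remain in the source systems, which is the limitation described above.

```python
# Hypothetical trust ranking: lower number = more trusted source for customer data.
SOURCE_PRIORITY = {"erp": 0, "crm": 1, "marketing": 2}


def merge_records(records: list[tuple[str, dict]]) -> dict:
    """Build one merged record from (source, record) pairs already matched to one customer.

    For each attribute, the value from the most trusted source that has a value wins.
    """
    golden: dict = {}
    # Visit sources from most to least trusted.
    for source, record in sorted(records, key=lambda pair: SOURCE_PRIORITY[pair[0]]):
        for attribute, value in record.items():
            if value not in (None, "") and attribute not in golden:
                golden[attribute] = value
    return golden


matched = [
    ("marketing", {"name": "Example Oy", "email": "info@example.com", "segment": "industrial"}),
    ("erp", {"name": "Example Oy", "email": "", "address": "Plant Road 5, Tampere"}),
    ("crm", {"name": "Example Ltd", "email": "sales@example.com"}),
]
print(merge_records(matched))
```

Here `name` and `address` come from ERP, `email` falls through to CRM because the ERP value is empty, and `segment` exists only in the marketing system.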

What if you could focus your efforts on fixing the root problem instead of the symptoms, and actually enhance and simplify the processes for creating and updating the data?

With MDM, you can harness data management to enhance data quality. The master data creation and update processes can be simplified so that keeping the master data up to date is easy and pleasant. Depending on the architectural approach, there can still be various sources for the master data if that is the best fit for the business process; nevertheless, the management is optimized and supports both the business processes and the end-user experience. Overlapping processes are removed, and it's clear who updates what data and in which system. As a result, data quality issues can be identified and fixed at the root, with no need to keep extinguishing small fires that would jeopardize the quality of the data used for decision making.
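One way to make "who updates what data and in which system" explicit is an attribute-level ownership map that every update is checked against. The Python sketch below is hypothetical: the system names, attribute assignments, `OwnershipError` and `apply_update` are illustrative, and the right split between systems depends on the chosen architectural approach.

```python
# Hypothetical ownership model: each master data attribute has exactly one owning system.
ATTRIBUTE_OWNER = {
    "name": "mdm",
    "delivery_address": "mdm",
    "credit_limit": "erp",
    "marketing_consent": "crm",
}


class OwnershipError(Exception):
    """Raised when a system tries to update an attribute it does not own."""


def apply_update(record: dict, changes: dict, source_system: str) -> dict:
    """Return an updated copy of `record`, accepting changes only from the owning system."""
    for attribute in changes:
        owner = ATTRIBUTE_OWNER.get(attribute)
        if owner != source_system:
            raise OwnershipError(f"{source_system} may not update {attribute!r} (owner: {owner})")
    return {**record, **changes}


record = {"name": "Example Oy", "credit_limit": 10000}
print(apply_update(record, {"credit_limit": 20000}, source_system="erp"))
# {'name': 'Example Oy', 'credit_limit': 20000}
try:
    apply_update(record, {"credit_limit": 0}, source_system="crm")
except OwnershipError as error:
    print(error)  # crm may not update 'credit_limit' (owner: erp)
```

The whole update is rejected if any single attribute is not owned by the sender, so a partially applied change cannot slip through.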

Many organizations are also considering undertaking big data, data science and artificial intelligence (AI) projects in order to enhance their business and gain a competitive advantage. However, every data science or AI project (like every traditional analytics project) is only as good as the data it uses, and the true value comes only when one is able to connect that data to master data domains. If the data is of poor quality and the connections to master data are not reliable, the results and the achieved advantage will be poor as well.

With MDM, one can create a rock-solid foundation for business-critical data, which enhances all data ventures and thus enables decision making, value creation and even new business opportunities by providing better visibility on the business through data.

The actual value that MDM brings to decision making is very hard to calculate, as the benefits are indirect and depend on how well the organization uses the MDM ecosystem. The more valuable, high-quality data is used, the greater the benefit. For example, by offering valuable master data for machine learning purposes, one can save money by optimizing maintenance processes based on data collected from production. Or one can find cross-selling opportunities for existing customers and create a whole new business.

Decisions are only as good as the data they are based on. Hence, high-quality master data enables high-quality decisions. The actual benefits can range from tens of thousands of euros to millions.

4. MDM helps to comply with regulations by creating a solid foundation for data management

The fourth aspect of MDM's value relates to the requirements that different regulations set for data and data management. Examples of such regulations are the General Data Protection Regulation (GDPR) in the EU and the International Financial Reporting Standards (IFRS). If organizations fail to comply with those requirements, they can end up paying millions of euros in fines.

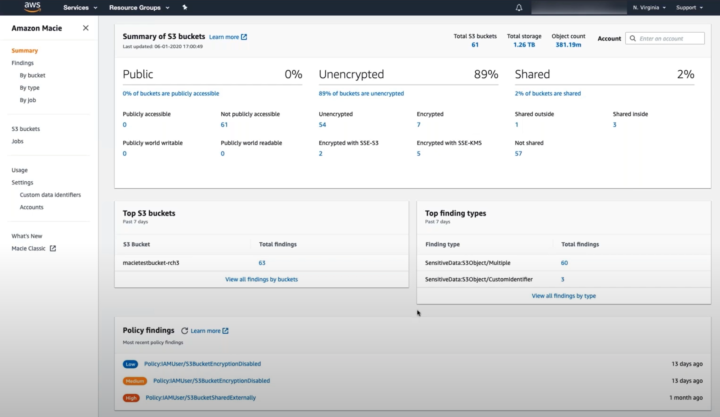

As an example, the key requirements that GDPR sets for personal data processing are transparent data processing, purpose limitation, data subject rights, consent for data processing, breach notifications, privacy by design, data protection impact assessments, protection of data transfers, appointing a data protection officer, and raising GDPR awareness and training among employees [1]. Hence, organizations need strict control over the technologies and processes they use to collect, manage and share information about their customers, employees, suppliers and other parties they do business with [2].

MDM helps organizations achieve the control over data processing required by regulations such as GDPR. When MDM is implemented at the core of data management, it's clear who has access to the master data, how it can be modified, where it flows, what its life cycle is, etc. In addition, with an MDM system one can connect separate data silos into one centralized repository that provides full visibility on the master data, e.g. customer or employee data. Technical requirements such as privacy by design can also be implemented directly in modern MDM tools to support regulatory compliance.
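Much of this visibility rests on a cross-reference that links each master record to the local keys under which the same party is stored in each source system. The Python sketch below is a hypothetical, in-memory version; with it, a question such as "in which systems is this person's data stored?", which GDPR's data subject rights make very practical, becomes a lookup rather than an investigation. The class, method names and keys are illustrative, not the API of any MDM product.

```python
from collections import defaultdict


class CrossReference:
    """Hypothetical MDM cross-reference: master record ID -> (system, local key) pairs."""

    def __init__(self) -> None:
        self._links: dict[str, set[tuple[str, str]]] = defaultdict(set)

    def link(self, master_id: str, system: str, local_key: str) -> None:
        """Record that `system` stores this party under `local_key`."""
        self._links[master_id].add((system, local_key))

    def locations(self, master_id: str) -> list[tuple[str, str]]:
        """List every (system, local key) where data about this party is stored."""
        # .get() avoids creating an empty entry for unknown IDs.
        return sorted(self._links.get(master_id, set()))


xref = CrossReference()
xref.link("P-001", "crm", "CUST-42")
xref.link("P-001", "erp", "1000042")
xref.link("P-001", "marketing", "lead-9913")
print(xref.locations("P-001"))
# [('crm', 'CUST-42'), ('erp', '1000042'), ('marketing', 'lead-9913')]
```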

Even though MDM cannot fully fulfill all the requirements that different regulations, such as GDPR, set for data processing, it creates a strong basis for data management and can make it significantly easier to reach the state required to comply with the regulations.

Hence, MDM saves time and money, as one doesn't have to start over every time new regulations are introduced. And it can potentially save millions of euros by avoiding huge fines for non-compliance.

Conclusions

Above, we went through four reasons why MDM is important. Of course, when thinking about the value, and especially the ROI, in the big picture, one also needs to consider the size of the investment, for example the licensing and development costs of building the MDM capability and the integrations that support automated data exchange.

As with many data-related projects, the major costs of an MDM project are usually incurred at the very beginning of the MDM journey. Most costs are related to setting up the MDM capability, building the integrations, handling change management, and so on. The benefits and savings, however, accrue over time, once the master data is under control. This means that calculating a short-term ROI is difficult. However, looking further ahead, a longer time frame of, let's say, 3-5 years should be enough to show a reasonable ROI in any master data project.
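To see why the time frame matters, it helps to compute ROI cumulatively, year by year: a large upfront cost followed by recurring benefits produces negative ROI early on and positive ROI later. The Python sketch below does that. All input figures are hypothetical placeholders, not estimates from this article, and for simplicity it assumes the full annual benefit starts in year one, whereas the text notes that benefits build up over time, so real payback would come later.

```python
from typing import Optional


def cumulative_roi(upfront_cost: float, annual_running_cost: float,
                   annual_benefit: float, years: int) -> list[float]:
    """ROI at the end of each year: (cumulative benefit - cumulative cost) / cumulative cost."""
    rois = []
    for year in range(1, years + 1):
        total_cost = upfront_cost + annual_running_cost * year
        total_benefit = annual_benefit * year
        rois.append((total_benefit - total_cost) / total_cost)
    return rois


def payback_year(rois: list[float]) -> Optional[int]:
    """First year (1-based) in which the cumulative ROI is no longer negative."""
    for year, roi in enumerate(rois, start=1):
        if roi >= 0:
            return year
    return None


# Hypothetical inputs, for illustration only.
rois = cumulative_roi(upfront_cost=200_000, annual_running_cost=20_000,
                      annual_benefit=80_000, years=5)
print([round(r, 2) for r in rois])  # [-0.64, -0.33, -0.08, 0.14, 0.33]
print(payback_year(rois))  # 4
```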

Even though MDM has clear advantages, one should still remember that large-scale MDM is not for everyone. Small organizations in particular can often handle their master data without launching separate MDM projects. However, as the organization grows and its architecture and ecosystem become more complex, one needs to start looking into how the data is actually managed. Otherwise, one will end up running the business with poor data and inefficient processes and will surely fall behind in the race of digitalization.

By correctly deploying a continuous MDM capability, one can create a supporting ecosystem of people, processes and technologies that enables data initiatives by bringing structure, visibility and endurance to data management.

References

[1] Bhatia, P. 2019. A summary of 10 key GDPR requirements. Advisera Expert Solutions Ltd. Available: https://advisera.com/eugdpracademy/knowledgebase/a-summary-of-10-key-gdpr-requirements/

[2] Assefa, B. 2018. Master Data Management for Achieving GDPR Compliance. DATAVERSITY Education, LLC. Available: https://www.dataversity.net/master-data-management-achieving-gdpr-compliance/#