Your AI partner can make or break you!

Large investments in AI clearly indicate that industries have embraced its value. This high adoption rate has led to a severe shortage of talented data scientists, data engineers and machine learning engineers. Moreover, with plenty of alternative options, high-paying jobs and numerous benefits on offer, it is clearly an employee’s market.

The market has a plethora of AI consulting companies ready to act as AI partners to leading industries. At one end are the traditional IT services companies that have evolved to provide AI services; at the other are AI start-ups with roots in academia and a research focus, striving to deliver top specialists to industry.

Suppose a company is willing to venture into AI with an AI partner. In this blog I shall enumerate the essentials to look for before picking a preferred AI partner.

AI knowledge and experience: AI is evolving fast, with new technologies developed by both industry and academia. Use cases in AI also span multiple areas within a single company. Most cases fall into the following domains: computer vision, computer audition, natural language processing, interpersonally intelligent machines, routing, and motion and robotics. It is natural to look for AI partners with specialists in these areas.

It is worth remembering that most AI use cases do not require AI specialists or super-specialists; generalists with wide AI experience can handle them well.

Also, specialising in AI alone does not suffice to bring a case successfully to production. Handling industrial AI use cases is not trivial, and novice AI specialists, including those fresh out of university, need oversight. Hence companies have to be careful with AI specialists who have only academic experience or little industrial experience.

Domain experience: Many AI techniques are applicable across cases in multiple domains. Hence it is not always necessary to seek consultants with domain expertise; often it is overkill that adds expert costs. Additionally, too much domain knowledge can restrict our thinking in some ways. However, there are exceptions where domain knowledge is helpful, especially when limited data are available.

A domain-agnostic AI consultant can create and deliver AI models in multiple domains in collaboration with the company’s own domain experts. It is therefore important for the company to make those experts available for such projects.

Problem-solving approach: This is probably the most important attribute when evaluating an AI partner. Company cases can be categorised into one of the following silos:

- Open sea: Though uncommon, a few such scenarios do arise when companies are at an early stage of their AI strategy. This is attractive for many AI consultants, who have the freedom to carve out an AI strategy and the subsequent steps to boost their clients’ AI capabilities. With such freedom comes great responsibility, so AI partners for these scenarios must be chosen carefully, favouring those with a long-standing position within the industry as a trusted partner.

- Straits: This is the most common case: the use case is at least coarsely defined, and suitable ML technologies must be chosen to take it to production. Such cases often don’t need high-grade AI researchers or scientists; generalist data scientists and engineers with experience of working in an agile way can be a perfect match.

- Stormy seas: This is possibly the hardest case, where the use case is not clearly defined and no ready solution is available. The use case can readily be defined with data and AI strategists, but researching and developing new technologies requires AI specialists or scientists. Hence special emphasis should be placed on checking that the partner has such specialists. It is worth noting that the availability of AI specialists alone does not guarantee conversion to production.

Data security: Data is the fuel for growth for many companies. It is quite natural that companies are extremely careful about safeguarding their data and its use. Thus, when choosing an AI partner, it is important to ask about the data security measures currently practised at the candidate organisation. In my experience it is quite common that AI specialists do not have data security training. If the company does not emphasise ethics and security, the data will most likely be stored by partners all over the internet (e.g. in personal Dropbox and OneDrive accounts), as well as on their private laptops.

Data platform skills: AI technologies are usually built on data. It is quite common that companies have multiple databases and no clear data strategy. AI partners with in-house experience in data engineering are a good fit; otherwise a separate partner will be needed.

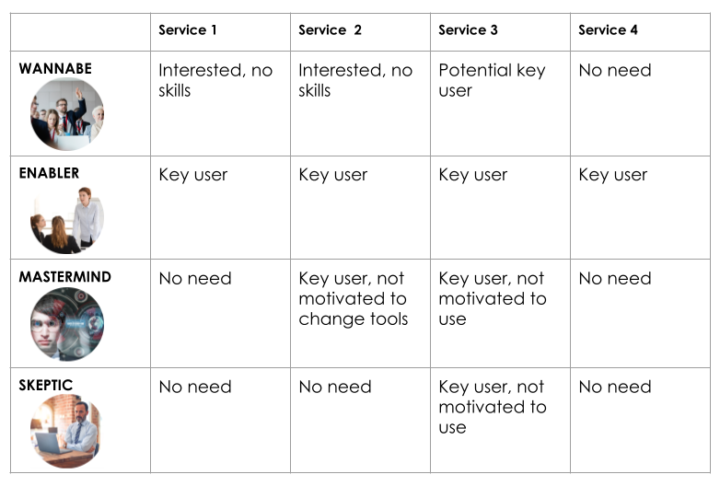

Design thinking: Design thinking is rarely considered a priority expertise when it comes to AI partnering and development. However, this is probably the hidden gem behind every successful deployment of an AI use case. AI design thinking adopts a human-centric approach, where the user is at the centre of the entire development process and her or his wishes matter most. The adoption of AI products increases significantly when the users’ problems are accounted for, including AI ethics.

Inflated marketing: AI partner marketing slides usually boast about the number of successful AI projects. Companies must be careful interpreting this number, as often a major portion of these projects are just proofs of concept that have not seen the light of day for various reasons. Companies should ask for the percentage of those projects that have entered production or at least reached a minimum viable product stage.
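To make that percentage concrete, here is a minimal Python sketch of the arithmetic. The function name `stage_share` and the figures (40 projects, 12 at MVP or beyond, 6 in production) are hypothetical and purely illustrative; they do not describe any real partner.

```python
def stage_share(count: int, total: int) -> float:
    """Return the share of projects (in percent) that reached a given stage."""
    if total <= 0:
        raise ValueError("total number of projects must be positive")
    if not 0 <= count <= total:
        raise ValueError("count must lie between 0 and total")
    return 100 * count / total


# Hypothetical figures a candidate partner might report when asked.
total_projects = 40   # projects advertised on the marketing slides
reached_mvp = 12      # reached at least a minimum viable product
in_production = 6     # actually running in production

print(f"MVP or beyond: {stage_share(reached_mvp, total_projects):.0f}%")
print(f"In production: {stage_share(in_production, total_projects):.0f}%")
```

With these made-up numbers, 40 "successful projects" shrink to 30% that reached an MVP and 15% that reached production, which is the kind of gap worth asking about.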

Above we highlight a few points to look for in an AI partner. However, more important than all of the above is the market perception of the candidate partner, and whether you as a buyer believe there is a culture fit: that they understand your values and terms of cooperation, and that they are able to co-define the value proposition of the AI case. Also, AI consultants should stand up for their choices and not shy away, for fear of losing the sale, from pointing out infeasibility or the lack of technologies or data needed to achieve the goals set for an AI use case.

Finding the right partner is not that difficult. If you wish to understand Solita’s position on the above pointers and are looking for an AI partner, don’t hesitate to contact us.

Author: Karthik Sindhya, PhD, AI strategist, Data Science, AI & Analytics,

Tel. +358 40 5020418, karthik.sindhya@solita.fi