Critical look at AWS Well-Architected – Analytics Lens from a smaller project perspective

AWS Well-Architected Framework is a set of questions and practices for creating good architectures. This May, AWS released the Analytics Lens for the AWS Well-Architected Framework, which focuses on analytical projects. This Lens is closest to my area of expertise, so it is a good time to write a blog post about it. As for my background, I usually work in Nordic projects, which generally have less data and smaller teams than the projects on which AWS architects base most of their viewpoints in the document.

This blog is a commentary on the Analytics Lens document and highlights the things that I disagree or strongly agree with. Many of the disagreements come from AWS using quite large projects as its reference, whereas relatively small projects are common in the Nordics. For example, AWS is selling Glue in many cases where it is too heavy for the amount of data and even a Lambda function could do the necessary transformations at a much smaller cost. On the other hand, the document has many good points with which I agree, such as using columnar formats in S3.

First there is a short introduction to the AWS Well-Architected Framework and the Analytics Lens, and after that the headers follow the structure of the original Analytics Lens document. The blog should be understandable without prior knowledge of the Well-Architected Framework, but some experience with AWS services and analytical concepts like data lakes would be good to have.

AWS Well-Architected Framework

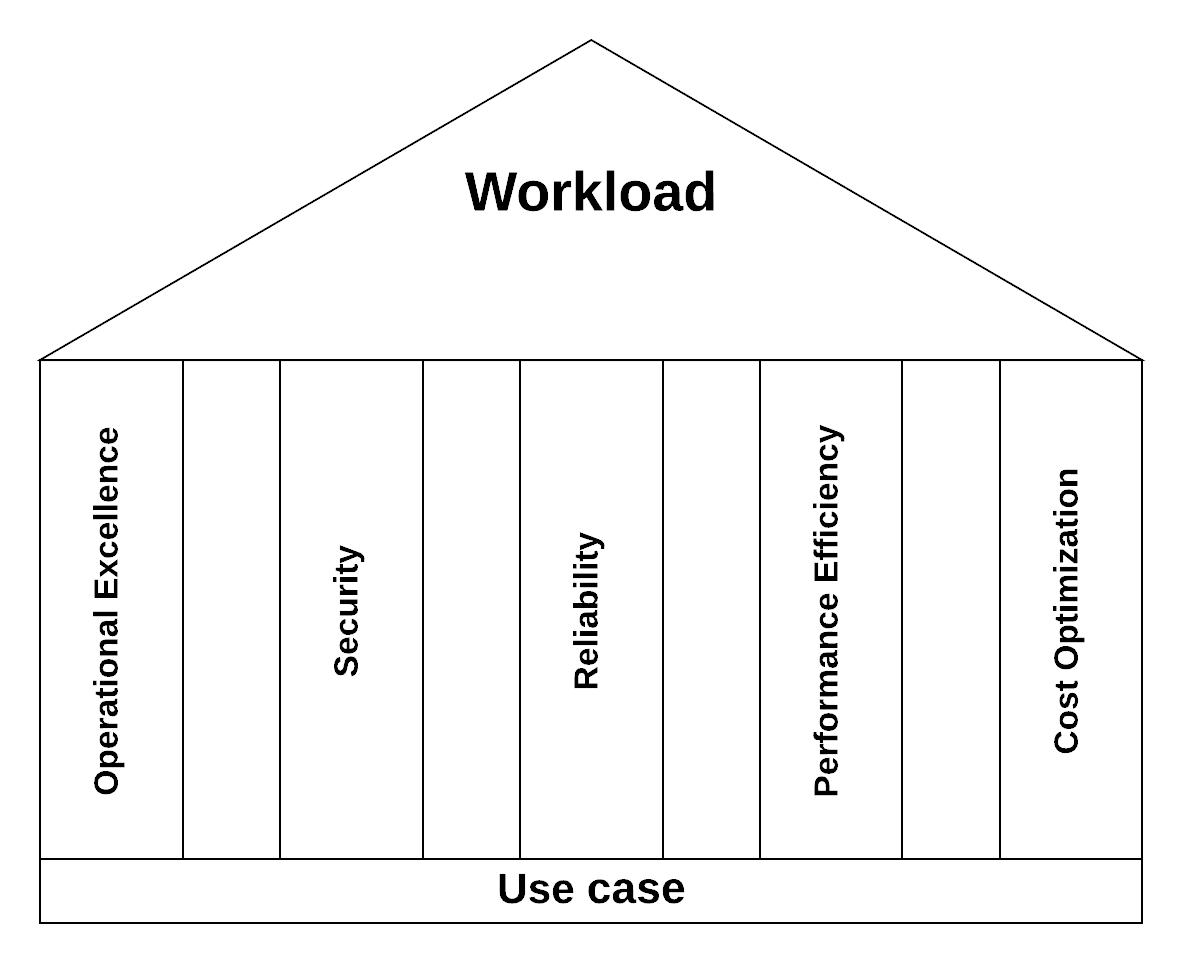

Let’s start by explaining what the AWS Well-Architected Framework itself is. It is a distilled version of the combined knowledge of AWS architects on what should be taken into account when creating well-architected systems. In practice, the framework is a collection of non-AWS-specific questions and AWS best practices that answer those questions. The questions are grouped into five pillars: Operational Excellence, Security, Reliability, Performance Efficiency and Cost Optimization.

Analytics Lens

The Analytics Lens is a focused look at the generic Well-Architected Framework’s questions and best practices from the point of view of data projects. The document consists of two parts. The first half is about different use cases and best practices for solving them. The second half is about the questions to focus on especially in analytics cases. Not all topics are discussed in this blog; I pick the parts that are most important in my opinion and comment on them. The headers from Definitions – Catalog and Search Layer through Scenarios come from the first half, and the Pillars come from the second half.

The document is intended for people in technology roles, and I concur with that, but it doesn’t require much technical AWS knowledge. The proposed technologies are explained at least at a high level in the document.

Overall, I agree with the material in the document, but I will comment critically from the point of view of smaller projects. By smaller projects, I mean projects where the largest tables have hundreds of millions of rows, not billions, and where a single team of about four people is responsible for everything, instead of multiple teams with more people in each.

For the rest of the blog, until the Recap and Closing words, the headers follow the headers of the original Analytics Lens document.

Definitions – Catalog and Search Layer

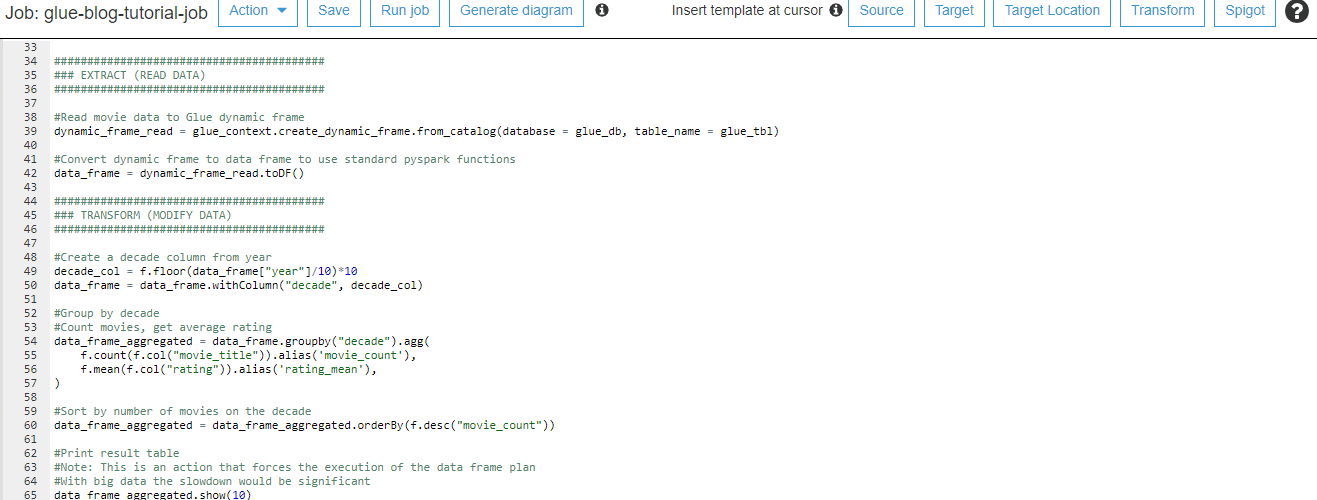

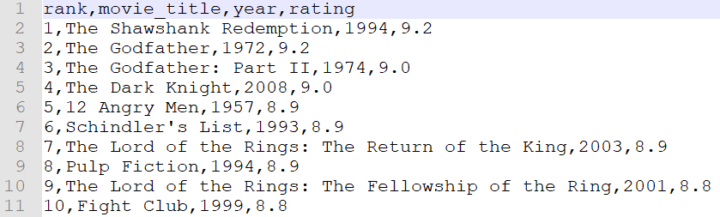

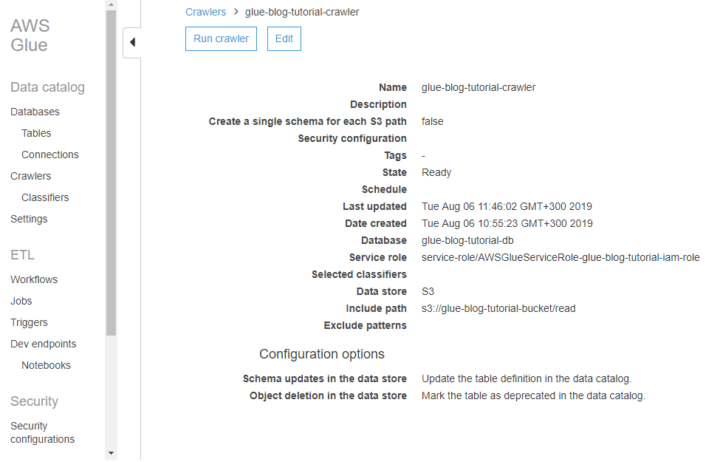

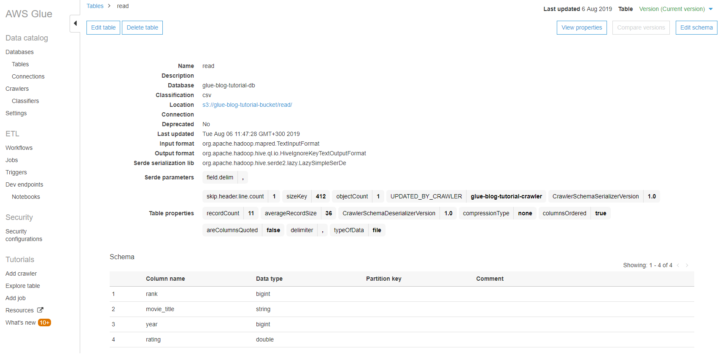

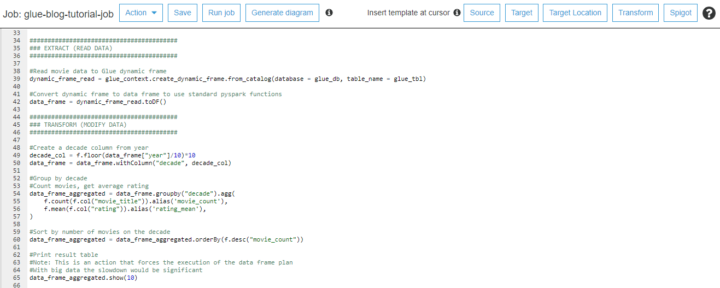

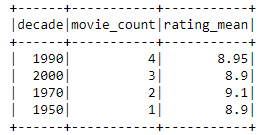

AWS Glue is marketed as being “…easy for customers to prepare and load their data…”, and it does have a wizard for creating jobs and it manages Spark clusters for you. But if you try to do anything more complex than mapping fields to different names, you need to change the Spark code, which might not be easy for all developers. Also, if you have network routing requirements for connecting to the source database, or have limited S3 access, you need to define the network in which Glue runs and remember the security group ingress rule that lets the cluster nodes reach each other.

Scenarios

Data Lake

Data Lake is defined as a centralized repository for storing all structured and unstructured data at any scale.

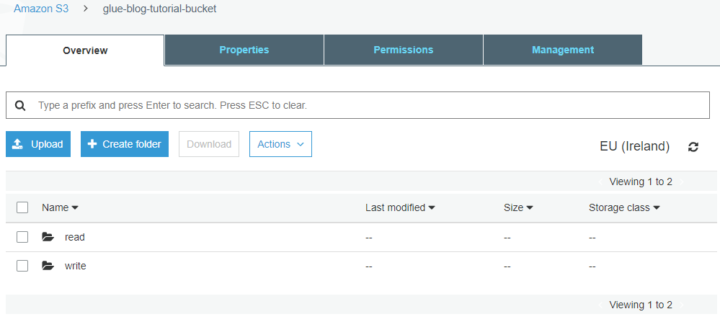

From this scenario, I want to highlight that there needs to be a process for cataloging and securing the data. With naming conventions you can already do this to some extent. This ties into a part that I don’t agree with: data providers are given only a location (an S3 bucket) and everything else is decided by the provider. By providing a few more guidelines, you make the data lake team’s work much easier, and this can also help in consuming the data. It should not take much extra work from the provider’s side when the practice is decided in the beginning.
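As a minimal sketch of what such a guideline could look like, the functions below build and validate object keys under a hypothetical convention `<source>/<dataset>/year=YYYY/month=MM/day=DD/<file>`. The layout and all names are assumptions for illustration, not something the Analytics Lens prescribes.

```python
import re
from datetime import date

# Hypothetical convention: <source>/<dataset>/year=YYYY/month=MM/day=DD/<file>
_NAME = re.compile(r"^[a-z0-9][a-z0-9-]*$")
_KEY = re.compile(
    r"^[a-z0-9][a-z0-9-]*/[a-z0-9][a-z0-9-]*/"
    r"year=\d{4}/month=\d{2}/day=\d{2}/[^/]+$"
)


def build_key(source: str, dataset: str, day: date, filename: str) -> str:
    """Build an S3 object key that follows the agreed convention."""
    for part in (source, dataset):
        if not _NAME.match(part):
            raise ValueError(f"invalid name: {part!r}")
    if not filename or "/" in filename:
        raise ValueError(f"invalid filename: {filename!r}")
    return (
        f"{source}/{dataset}/"
        f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
        f"{filename}"
    )


def follows_convention(key: str) -> bool:
    """Check whether an existing key matches the convention."""
    return _KEY.match(key) is not None
```

A provider could call `build_key` when writing, and the data lake team could run `follows_convention` over a bucket listing to spot data that landed outside the agreed layout.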

Batch Data Processing

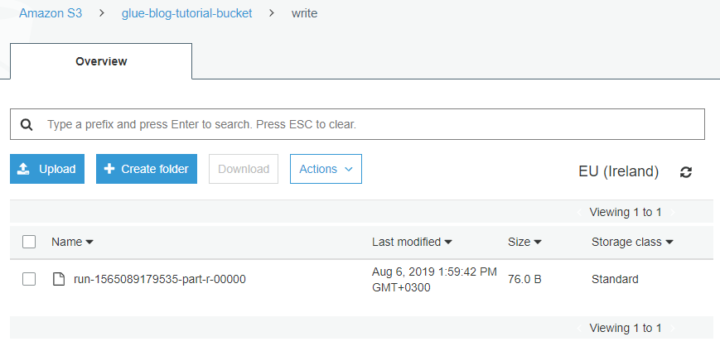

In the Batch Data Processing scenario, only EMR, Glue and Batch are mentioned. Why not Fargate? EMR and Batch first launch EC2 instances. In Batch, Docker containers are then run on top of the instances, and EMR creates a compute cluster. Glue is serverless in that sense, but it also has quite a long startup time and cost. The startup time should be decreasing in the near future, but I haven’t heard that they would decrease the cost or the minimum billable time, which is now ten minutes. Fargate launches relatively fast, and the cost isn’t very high when it is used for batch processing. The caveat here is of course the complexity of the compute logic and the amount of data. It feels like AWS architects have again gone with the large dataset option only.
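To make the effect of a minimum billable time concrete, here is a small sketch of the arithmetic. The ten-minute minimum comes from the text above; the function name, the DPU count and the price per DPU-hour are placeholders that you should replace with the current values for your region.

```python
def billed_job_cost(runtime_minutes: float, dpus: int,
                    price_per_dpu_hour: float,
                    minimum_minutes: float = 10.0) -> float:
    """Cost of one job run when the runtime is rounded up to a minimum."""
    # Short jobs are billed as if they had run for the minimum duration.
    billed_minutes = max(runtime_minutes, minimum_minutes)
    return billed_minutes / 60.0 * dpus * price_per_dpu_hour
```

With these assumptions, a job that finishes in two minutes costs exactly as much as one that runs for ten, so many small and frequent batch jobs pay mostly for time they never used.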

AWS Step Functions is marketed as visual workflows, when in truth it only offers a visual representation of a workflow that is written as JSON in the Amazon States Language. For many technical people this might be better than a drag-and-drop UI, but I don’t like it being marketed as a visual tool when it only visualizes the end result.
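To show what defining a workflow as text means in practice, here is a sketch of a two-step definition in the Amazon States Language, built as a Python dictionary and serialized to JSON. The state names and the function ARNs passed in are hypothetical.

```python
import json


def build_state_machine(extract_arn: str, load_arn: str) -> str:
    """Return a two-step workflow as an Amazon States Language document."""
    definition = {
        "Comment": "Hypothetical two-step batch workflow",
        "StartAt": "Extract",
        "States": {
            # Each Task state points at a resource (for example a Lambda function).
            "Extract": {"Type": "Task", "Resource": extract_arn, "Next": "Load"},
            "Load": {"Type": "Task", "Resource": load_arn, "End": True},
        },
    }
    return json.dumps(definition, indent=2)
```

The console then draws the graph from this document; the picture is an output, not the place where the workflow is edited.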

Streaming Ingest and Stream Processing

The authors raise a good point that you should plan a robust infrastructure that can adapt to changes in the volume of data coming through the stream. Unfortunately, Kinesis Data Streams doesn’t provide this out of the box yet, and you need to build it yourself with Application Auto Scaling or something else. On the other hand, Firehose does provide this functionality, with limitations in other areas.
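Whatever mechanism does the scaling, it needs a target shard count. The sketch below derives one from the per-shard ingest limits of Kinesis Data Streams (1 MB per second or 1,000 records per second). The function name and the 25 % headroom default are assumptions for illustration.

```python
import math

SHARD_WRITE_MB_PER_SEC = 1.0        # per-shard ingest limit in MB/s
SHARD_WRITE_RECORDS_PER_SEC = 1000  # per-shard ingest limit in records/s


def required_shards(mb_per_sec: float, records_per_sec: float,
                    headroom: float = 1.25) -> int:
    """Smallest shard count that fits the observed load plus headroom."""
    by_bytes = mb_per_sec * headroom / SHARD_WRITE_MB_PER_SEC
    by_records = records_per_sec * headroom / SHARD_WRITE_RECORDS_PER_SEC
    # Whichever limit is hit first decides the shard count.
    return max(1, math.ceil(max(by_bytes, by_records)))
```

A scheduled job could feed this with the stream’s incoming bytes and records metrics and reshard when the result differs from the current shard count.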

Kinesis Data Streams not having resource-based policies for cross-account sharing is presented as a positive thing. To an extent it is, as you can’t grant access to the stream by mistake. But if someone in another account wants to send data to the stream, the sender needs either a user in the target account or the right to assume a role there. The latter doesn’t always work with third-party tools, which expect static access credentials.

This is a good place for an example of how missing the small details in pricing can lead to five or even ten times the estimated cost. The Kinesis Producer Library (KPL) is very useful, as it aggregates the records you are sending into larger records, up to the maximum record size. With Kinesis Data Streams itself this isn’t much of an issue unless you are hitting the record limits per shard, but Firehose is another matter. Firehose is billed in 5KB steps, rounded up per record. Hence, if each record is 1KB in size, you are paying five times the amount that you estimated from the daily data volume, and with 0.5KB records ten times. KPL fixes this, as each record is filled as full as possible. But if you are using Firehose transformations, you need an intermediate Kinesis data stream before Firehose, as only then does Firehose understand that a single Kinesis record contains multiple records to be transformed.
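The rounding effect is easy to check with a few lines of arithmetic. This sketch assumes only the 5KB billing step described above; the function names are made up for the example.

```python
import math

BILLING_STEP_BYTES = 5 * 1024  # each record is rounded up to the next 5 KB


def billed_bytes(record_sizes):
    """Total bytes billed when every record is rounded up to a 5 KB step."""
    return sum(
        math.ceil(size / BILLING_STEP_BYTES) * BILLING_STEP_BYTES
        for size in record_sizes
    )


def overpay_factor(record_sizes):
    """Ratio between billed bytes and the bytes actually sent."""
    return billed_bytes(record_sizes) / sum(record_sizes)
```

For a thousand 1KB records the factor is 5.0, and for records that already fill a whole 5KB step it is 1.0, which is the situation aggregation tries to get close to.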

The Kinesis Client Library (KCL) should be used for two reasons: it can parse the records aggregated by KPL, and it keeps track of the position in each shard. Just remember that KCL creates a DynamoDB table to store that position. The cost is quite minimal and the required IAM access isn’t very broad, but it is something to keep in mind.

The Kinesis aggregation library is also available separately: https://github.com/awslabs/kinesis-aggregation. KPL is not available in all environments (it is a Java wrapper for a C++ executable), so the aggregation library is necessary, for example, when using Lambda functions.

Multitenant architecture

The lens correctly says that users should have just enough privileges to access their own data and not the data of other tenants. But this is easier said than done in a true multitenant mode, because writing good IAM policies is not the easiest thing to do. And generally the promise of the public cloud to analysts and other users has been the freedom to do what they want without many guardrails. Billing can also be a hassle, especially if there are costly data transfers. Therefore, I generally recommend separate accounts for different teams. The baseline is then that no access is given and all teams are responsible for their own costs. Of course, there can and should be shared resources, for example audit backups, but those are maintained by completely different teams that don’t have access to the source accounts.

Operational Excellence Pillar

ANALYTICS_OPS 05: How are you evolving your data and analytics workload while minimizing the impact of change?

AWS Secrets Manager is mentioned again as a place to store credentials and other secrets. Since the service was launched, there hasn’t been much mention that Parameter Store can also store secrets encrypted with KMS, with the same level of security as Secrets Manager. There are probably two main reasons: one is positive from the customer’s point of view and the other is more about getting more money to AWS. The positive one is that Secrets Manager has a lot more features than Parameter Store, especially when used with RDS. The billing side is that Parameter Store is free apart from the KMS invocations, whereas Secrets Manager charges per secret and per API call. But it seems that the pricing has come down quite a bit; it is now only 40 cents per secret per month.

Security Pillar

ANALYTICS_SEC 2: How do you authorize access to the analytics services within your organization?

I like the terminology of “fine-grained” and “coarse-grained” approaches to user segmentation. “Fine-grained” links to the fully shared multitenant architecture, where all resources are shared and access control is done at quite a low level. “Coarse-grained” is used in the silo multitenant architecture, where almost everything is done in accounts owned by the team and access needs to be granted with cross-account roles or resource policies. AWS prefers the coarse-grained version for organizations with a large number of users, but for me it should also be considered when working with small autonomous teams, even if the number of users itself isn’t very large. You can lose a lot of time trying to set up the correct fine-grained access, and even then you might have missed something and created a security hole, or the requirements have changed and you need to make changes.

ANALYTICS_SEC 5: How are you securing data in transit?

Just a small highlight: data is generally SSL/TLS encrypted when you are using AWS services, but Redshift is an anomaly here. You need to separately enable SSL in the JDBC configuration and, if possible, also block non-SSL traffic.

ANALYTICS_SEC 6: How are you protecting sensitive data within your organization?

S3 object tagging is said to be a good way of marking what is sensitive data. After that, you can write IAM policies with conditions to limit access. You should also look into restricting who can change tags, because otherwise users could grant additional access to themselves.

I disagree with this idea for multiple reasons, but let’s start on a positive note with what I agree with. Tagging makes it possible to define fine-grained access policies and, in general, to have more metadata about what the data is like. But in many cases it can hide information, raise questions about why S3 commands failed, increase maintenance requirements and introduce security holes. I would rather have the data sensitivity be part of the S3 path, for example store-db/generic/post-number, store-db/company/products and store-db/PII/customers. This way the sensitivity information is easy to find, and IAM access can be given using prefixes and wildcards. Of course, this approach requires a robust pipeline, so that you can trust that data goes into the correct prefixes, and in addition you should check the stored data from time to time. Getting data into the correct prefixes might require modifying the raw data, and in that case the raw data should be treated as having the highest possible level of sensitivity.
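As a sketch of how access by prefix could look, the function below generates a read-only IAM policy for a list of allowed prefixes. The bucket name used in the usage note is hypothetical, and the policy is a minimal illustration under these assumptions rather than a complete production policy.

```python
import json


def prefix_read_policy(bucket: str, prefixes: list) -> str:
    """Build an IAM policy that allows listing and reading only given prefixes."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListAllowedPrefixes",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is limited to keys under the allowed prefixes.
                "Condition": {
                    "StringLike": {"s3:prefix": [f"{p}/*" for p in prefixes]}
                },
            },
            {
                "Sid": "ReadAllowedPrefixes",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": [f"arn:aws:s3:::{bucket}/{p}/*" for p in prefixes],
            },
        ],
    }
    return json.dumps(policy, indent=2)
```

Calling `prefix_read_policy("example-data-lake", ["store-db/generic", "store-db/company"])` gives a policy that never mentions the PII prefix, so access to it stays denied by default.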

Why would AWS then want to market S3 object tagging? One reason is probably what I already said about fine-grained access, but another is that a couple of years back they introduced the Macie service, which does the tagging for you. This takes away some of the cons I raised, but of course it increases your costs as a user, and I don’t have experience of how well it finds Nordic Personally Identifiable Information (PII).

Performance Efficiency Pillar

A very good point here is using business and application requirements to define performance and cost optimization goals. AWS gives lots of possibilities for storing data, but unfortunately customers don’t always know what the requirements are, which makes architecting the best solution difficult.

In the on-prem world you generally had one place to store data, and when it was nearing its limits, old data was just deleted (or more disk space was added). Now that you can have very cheap storage in Glacier, customers think that everything should be saved, even though there should still be systematic data life-cycle thinking, at least from a legal point of view (GDPR etc.).

ANALYTICS_PERF 01: How do you select file formats and compression to store your data?

A very large thumbs up for columnar formats if you are using, or have even a slight feeling that you might want to use, Athena, Spectrum or, for example, Snowflake external tables. Columnar formats aren’t really required if you are just dumping the data in S3 before loading it into a data warehouse. But even in this case you should compress your data and split it in an optimal way for the target database.
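For the plain dump-and-load case, compressing and splitting can be as simple as the sketch below, which distributes text lines round-robin into a given number of gzip-compressed parts. The function name is made up, and the right number of parts depends on how the target database parallelizes its load, so treat the value you pass in as something to tune.

```python
import gzip


def split_and_compress(lines, parts: int):
    """Distribute lines round-robin into `parts` gzip-compressed chunks."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    buckets = [[] for _ in range(parts)]
    for index, line in enumerate(lines):
        buckets[index % parts].append(line)
    chunks = []
    for bucket in buckets:
        # One newline-terminated line per record, encoded as UTF-8.
        payload = "".join(f"{line}\n" for line in bucket).encode("utf-8")
        chunks.append(gzip.compress(payload))
    return chunks
```

Each chunk can then be uploaded as its own object, which lets a loader that reads files in parallel work on roughly equal pieces.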

Cost Optimization Pillar

ANALYTICS_COST 04: What is your data lifecycle plan?

This ties in smoothly with my comments on the performance pillar. The life cycle should be taken into account early in the project, because it might take some time to get the information from the business. The technical usage pattern is of course another option, but you might not have visibility into that for some time, because users are not yet using your system in a normal way. I haven’t tested S3 analytics, but it looks like a good option for finding out the usage patterns. Other ways could be S3 logs or CloudTrail, if they are enabled.

ANALYTICS_COST 07: How are you managing data transfer costs in your analytics application?

This is an area that is often overlooked. Most people who work with AWS know that transferring data into AWS is free and transferring it out costs money. This makes perfect sense given AWS’s plan to get customers to migrate both data and compute to its cloud. The transfer cost between regions also makes quite a lot of sense, because the data goes to a remote location.

But I feel that not everyone knows that there are also data transfer costs between availability zones (AZs). The cost is a lot smaller than between regions or out to the internet, but it can still pile up. The general best practice is to split resources across multiple AZs for high availability (HA). The cost isn’t so high that you shouldn’t split across AZs if you have HA requirements, but if the architecture isn’t really HA and some parts are in only one AZ, the situation is different. For example, you want to pay for only one database, but you configured the Lambda network settings so that functions can launch in any AZ. In this situation, if the AZ where the database runs is down, the system won’t work in any case, and during normal operation you also pay extra for the Lambda functions that request data from other AZs.

ANALYTICS_COST 08: What is your cost allocation strategy for resources consumed by your analytics application?

We are again back at the question of how to set up multitenant architectures. Two different styles are introduced in the document: siloed and shared. In the siloed style, each team (and for me also each environment) has a separate account, and in the shared style everyone is in the same account. In both cases, there can be some supporting accounts separate from development. When using a shared architecture, allocating cost center tags to resources is more or less necessary. But even that won’t be enough if you want to truly share resources and their costs. In that case, for example, an RDS database doesn’t belong to just one cost center, and you need additional metrics for calculating how much of the database each cost center has used.
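Once such a usage metric exists, splitting the bill is simple proportional arithmetic. The sketch below assumes a hypothetical metric per cost center (it could be query seconds, connections or stored gigabytes); choosing a metric that everyone accepts as fair is the hard part, not the calculation.

```python
def allocate_shared_cost(total_cost: float, usage_by_cost_center: dict) -> dict:
    """Split a shared resource's cost in proportion to measured usage."""
    total_usage = sum(usage_by_cost_center.values())
    if total_usage <= 0:
        raise ValueError("total usage must be positive")
    return {
        cost_center: total_cost * usage / total_usage
        for cost_center, usage in usage_by_cost_center.items()
    }
```

For example, a 300 unit monthly database bill with usage of 2 and 1 for two teams is split into 200 and 100.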

On the other hand, if you are using a siloed architecture with AWS Organizations, you have a clear view of how much each team and environment has been using resources. AWS highlights that there is added complexity in managing users and resources, but I don’t really buy it. To me, the downside is the underutilization of resources, but that can be minimized with good metrics and by using services instead of EC2 machines with custom installations.

Recap

The Analytics Lens is quite an informative document, and I agree with most of its points. The points I don’t agree with generally fall into three categories: 1. written only with larger organizations in mind, 2. assuming large supporting teams, or 3. just trying to sell more AWS services. None of these is inherently bad, and you should always take this kind of best-practices documentation with a grain of salt. As you should also take mine. I am only one person, even though my viewpoint has been shaped by my customer projects, colleagues and AWS’s own material.

I hope that this was an informative look at the Analytics Lens, with detours into other important aspects of data-intensive applications in AWS. My recommendation is to read the document yourself and come to your own conclusions.

Closing words

At AWS Summit EMEA, Werner Vogels (Amazon.com CTO) mentioned the Well-Architected Framework in his keynote. He emphasized that they want customers to build the best systems they can in AWS, and the Well-Architected Framework is one tool to help with this.

I also strongly recommend that you do a Well-Architected review of your solutions and workloads, with or without the Analytics Lens recommendations. You can do it yourself or ask a more experienced third party to facilitate. Solita is one of the AWS Well-Architected Partners, so we are at your service in this area as well. If you have any questions about this, send me a message.

Additional reading

- Landing page for AWS Well-Architected Framework: https://aws.amazon.com/architecture/well-architected/

- AWS blog post for Analytics Lens: https://aws.amazon.com/blogs/big-data/build-an-aws-well-architected-environment-with-the-analytics-lens/

- Analytics Lens document: https://d1.awsstatic.com/whitepapers/architecture/wellarchitected-Analytics-Lens.pdf