Recapping AI-related risks to organizations

Faced with uncertainty, the natural response is to hold back, i.e. not to invest large sums in an uncertain endeavour. But AI risks do not go away in small projects either.

This might leave organizations perplexed as to what to do. On one hand, there is the call to embrace AI. On the other, the risks are real.

As a rule of thumb, a longer time perspective won’t hurt. Predictive modeling and automation are long-running investments. As such, they should be subject to risk assessment and scrutiny, and they should be managed over their entire life span.

Because AI solutions are partly speculative in nature, their risk of failure is relatively high. A recent study underlined this, suggesting that roughly four out of five AI projects fail in the real world.

A predictive model has its particular strengths and weaknesses. But it has some recurring costs too, both implicit and explicit. Some of these costs may fall immediately to the supporting organization. And some of them might even fall outside of it.

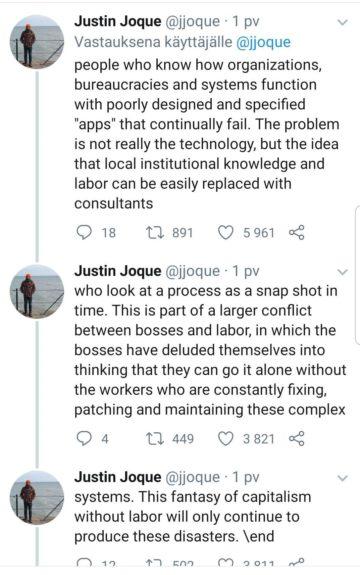

The following (otherwise unrelated) tweet from a couple of days back pinpoints these risks neatly.

Leaving aside the social discourse, I very much agree with its observations about organizations. There is a certain mindset that data science (DS) will magically fix business perspectives and organizational shortcomings. In my personal opinion, this is naïve at best. It is not an overstatement to call it dangerous in some cases.

The use of automation requires a certain robustness from surrounding structures.

AI as part of larger systems

In classical control theory, systems are designed around the principle of stability. A continuously working system, like a production line, is regulated by comparing its measured output with the desired output. The problem is to make processes optimal by making them smooth, and to achieve a good ratio of output to resources used.
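The idea of regulating a system by comparing measured and desired output can be illustrated with a toy feedback loop. This is only a minimal sketch: the function name `simulate_p_control`, the gain values, and the one-line process model are all assumptions made for illustration, not a description of any real production line.

```python
def simulate_p_control(setpoint, gain, steps, initial=0.0):
    """Drive a measured output toward a desired setpoint.

    Toy proportional controller (hypothetical example): at every step
    the output is corrected in proportion to the current error.
    """
    output = initial
    history = []
    for _ in range(steps):
        error = setpoint - output   # desired output minus measured output
        output += gain * error      # apply a correction proportional to the error
        history.append(output)
    return history


# A modest gain settles smoothly on the target.
stable = simulate_p_control(setpoint=100.0, gain=0.5, steps=20)

# An overly aggressive gain overshoots more on every step and never settles.
unstable = simulate_p_control(setpoint=100.0, gain=2.5, steps=20)

print(abs(stable[-1] - 100.0) < 1e-3)    # the stable run ends close to the setpoint
print(abs(unstable[-1] - 100.0) > 1000)  # the unstable run has drifted far away
```

The same controller is either smooth or wildly oscillating depending on how it is tuned to its surroundings, which is why stability is treated as a design principle rather than an afterthought.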

Often, AI is a part of a larger production machinery. The whole process may involve human beings and other machine actors as well. Recent AI victories make a lot of sense when seen in this kind of framing.

To take a famous example, the victory of Google DeepMind’s AlphaGo over human players was supported by human-maintained tournament protocols, servers, and arrangements. Not to mention the news media that helped to shape the event when it took place.

The AI’s job was relatively simple as far as inputs and outputs go: receive a board position and suggest the next move. How the AI learned to play Go in the first place was also the result of multiple years of engineering. Its training was enabled by human work, and its progress was assessed by humans along the way.

The case of adverse outcomes

If we look at organizations, there are always hidden costs when adopting new procedures and processes. Predictive model performance, on the other hand, is largely measured by the number of explicit mistakes that the model makes. These kinds of explicit mistakes may capture part of the cost of an automated solution. But the failure rate is hardly a comprehensive measure in a complex setting.

Just as some moves in a game may be very costly in terms of winning, some mistakes may be very costly to an organization.
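A small numerical sketch may make the difference between counting mistakes and costing them more concrete. Everything here is invented for illustration: the two models, the number of decisions, and the per-mistake costs are hypothetical, and in practice the hard part is estimating those costs at all.

```python
def failure_rate(mistake_costs, n_decisions):
    """Share of decisions that were explicit mistakes."""
    return len(mistake_costs) / n_decisions


def total_cost(mistake_costs):
    """Sum of what the mistakes actually cost the organization."""
    return sum(mistake_costs)


N_DECISIONS = 100

# Hypothetical model A: many mistakes, each one cheap to correct.
model_a_costs = [1.0] * 10

# Hypothetical model B: few mistakes, each one very expensive.
model_b_costs = [500.0] * 2

for name, costs in [("A", model_a_costs), ("B", model_b_costs)]:
    rate = failure_rate(costs, N_DECISIONS)
    print(f"model {name}: failure rate {rate:.2f}, total cost {total_cost(costs):.0f}")
```

Under these made-up numbers, model B looks five times better by failure rate while costing a hundred times more, so ranking the two by failure rate alone would pick the wrong one.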

One recent observation within the field concerns the implicit “ghost” work that goes into keeping up AI appearances: fixing and hiding AI-based errors, or even correcting AI decisions before they have time to cause harm.

Traditional production lines have fallback mechanisms, for example for turning the line off in case of an emergency. Emergency protocols are in place because unexpected events may occur in the real world. This is a very healthy mindset for AI development as well, and we should embrace it fully. An organization should take these things into account when planning and assessing a new solution.
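As a rough sketch of what such a fallback could look like around a predictive model, consider the wrapper below. The class `GuardedModel`, the confidence threshold, and the toy `toy_predict` and `manual_review` functions are all hypothetical names made up for this example; a real system would need its own criteria for when to distrust the model.

```python
class GuardedModel:
    """Wrap a model with an emergency stop and a fallback path (illustrative only)."""

    def __init__(self, predict, fallback, min_confidence=0.8):
        self.predict = predict            # returns (decision, confidence)
        self.fallback = fallback          # e.g. route the case to a human
        self.min_confidence = min_confidence
        self.enabled = True               # the "off switch" for the line

    def stop(self):
        """Emergency stop: every later decision goes to the fallback."""
        self.enabled = False

    def decide(self, x):
        if not self.enabled:
            return self.fallback(x), "fallback: stopped"
        try:
            decision, confidence = self.predict(x)
        except Exception:
            # The model failed outright; do not let the failure propagate.
            return self.fallback(x), "fallback: model error"
        if confidence < self.min_confidence:
            return self.fallback(x), "fallback: low confidence"
        return decision, "model"


def toy_predict(x):
    """Stand-in for a real model (assumption for the example)."""
    if x < 0:
        raise ValueError("input outside the range seen in training")
    return "approve", (0.95 if x > 10 else 0.5)


def manual_review(x):
    return "send to human review"


guarded = GuardedModel(toy_predict, manual_review)
print(guarded.decide(20))   # confident prediction, handled by the model
print(guarded.decide(5))    # low confidence, handed to the fallback
print(guarded.decide(-1))   # unexpected input, handed to the fallback
guarded.stop()
print(guarded.decide(20))   # after an emergency stop nothing reaches the model
```

Returning the route taken alongside each decision is a deliberate choice in this sketch: it keeps the fallback work visible and countable instead of letting it disappear into the background.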

No matter how good a solution’s preliminary results are, it will start failing sooner or later when something unexpected happens. And it will not fix itself. Its use will probably also create unexpected side effects even when it is doing a superb job.