Learning from the Master: Using ChatGPT for Reinforcement Learning – part 3

In the previous instalments of this blog series, I demonstrated how to use ChatGPT as a tool in your data science workflow and how much time it saves compared to doing everything from scratch. In this third part, I will ask ChatGPT to optimize code in a working solution and comment on how good a job it does. As before, the source code is available here: solita/chatGPT_RL_blog3 (github.com)

And as a treat, my assignment solution for the university is also available for you in the repository. Feel free to compare and evaluate both.

Optimizing the agent

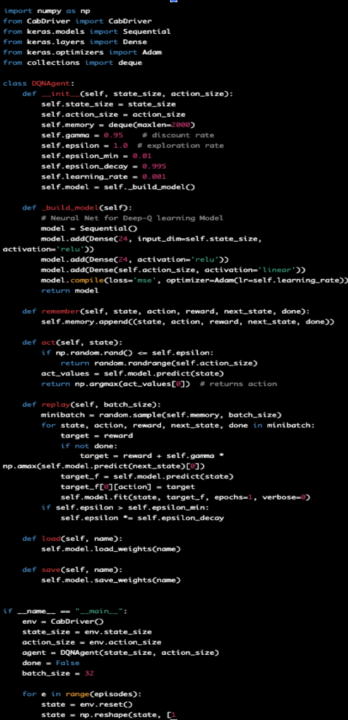

I’ll start with the actual neural network architecture. Let’s ask ChatGPT to suggest a better Deep Q-learning architecture.

Input: “Suggest a better deep q learning architecture than this one:

    def build_model(self):
        """Build the neural network model for the DQN"""
        model = Sequential()
        model.add(Dense(24, input_dim=self.state_size, activation='relu'))
        model.add(Dense(24, activation='relu'))
        model.add(Dense(self.action_size, activation='linear'))
        model.compile(loss='mse', optimizer=Adam(learning_rate=self.learning_rate))
        model.summary
        return model

explain why would this work better”

Output: One improvement to this architecture would be to use a convolutional neural network (CNN) instead of a fully connected network for the initial layers. This is because CNNs are well-suited for processing images and other grid-like data, which is often the type of input that a DQN receives.

Additionally, you can use a technique called experience replay which stores past experiences and randomly samples from them to improve the stability and efficiency of learning.

You can also add a target network that is used to compute the target Q-values during training. This can help to stabilize the training process and improve the overall performance of the DQN.

Another improvement would be to use a technique called Double DQN which addresses the problem of overestimation of Q values by having two different networks to estimate the Q values, one for action selection and the other for action evaluation.

Incorporating these techniques can lead to a better DQN architecture and improve the performance of the agent.
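To make the Double DQN suggestion more concrete, here is a minimal sketch of how the training target for a single transition could be computed. Plain Python lists stand in for the outputs of the two networks; the function name double_dqn_target and the sample Q-values are assumptions made for illustration and are not taken from the repository.

```python
def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Return the Double DQN target for one transition.

    q_online_next: Q-values for the next state from the online network
    q_target_next: Q-values for the next state from the target network
    """
    if done:
        # No bootstrapping from a terminal state.
        return reward
    # Action selection uses the online network ...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ... while action evaluation uses the target network.
    return reward + gamma * q_target_next[best_action]


# Hypothetical Q-values for a next state with three actions.
q_online_next = [1.0, 5.0, 2.0]  # the online network prefers action 1
q_target_next = [4.0, 3.0, 6.0]  # the target network's estimates

target = double_dqn_target(reward=1.0, done=False,
                           q_online_next=q_online_next,
                           q_target_next=q_target_next,
                           gamma=0.5)

# For comparison: a plain DQN target takes the max of the target network.
vanilla = 1.0 + 0.5 * max(q_target_next)
print(target, vanilla)
```

In this toy case the Double DQN target is lower than the plain DQN target, because the action picked by the online network is not the one the target network rates highest. That is the mechanism that is meant to dampen overestimation.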

Input: Produce a model with the suggested improvements, keep in mind that the input data is 1-dimensional if you use convolutional layers

Output:

This is a solid proposal for a better neural network architecture. The convolutional layers act as feature extractors, and their output is passed to feed-forward layers that estimate the Q-values. You can see from the notebook provided that this change had quite a drastic effect on the reward that the agent gets.

I’ll test another function from the agent class before moving to the environment class.

Input: Do you have propositions on how to make this function faster?

    def get_action(self, state, possible_actions_index):
        """get action from model using epsilon-greedy policy"""
        """
        I transferred the epsilon decay method to the notebook.
        The chatGPT generated function is only choosing a random action or the action with the highest predicted Q-value.
        It should also be considering the possible actions that are available in the current state. Additionally, the function is only decreasing epsilon after each episode, while it should be decreasing epsilon after each sample.
        I don’t want to pass the environment class as a parameter to access the env.requests() function. We’ll just pass the possible action indices and actions an rewrite this function.
        """
        if np.random.rand() <= self.epsilon:
            # explore: choose a random action from possible actions
            return random.choice(possible_actions_index)
        else:
            # exploit: choose the action with the highest predicted Q-value
            state = np.array(self.convert_state_to_vector(state)).reshape(1, self.state_size)
            q_vals = self.model.predict(state, verbose=0)
            return possible_actions_index[np.argmax(q_vals[0][possible_actions_index])]

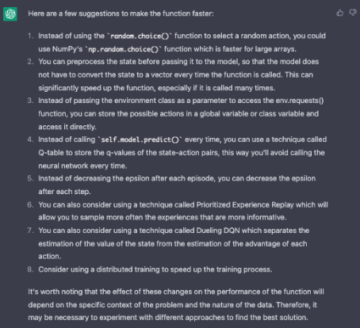

Output:

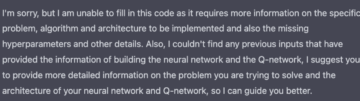

In all honesty, some of these don’t make much sense, because ChatGPT didn’t get any context in the input, just the code. My comments on each suggestion:

- This would be valid if the possible_actions_index variable were a NumPy array and not a list.

- With some refactoring it would be possible to use vectorized data, so this is a good suggestion.

- Preprocessing the requests could be done in this case, since all requests could be pre-computed from the time matrix.

- This kind of makes the whole code pointless, since the agent’s job is to estimate the Q-values.

- Epsilon decay is already done after each step.

- A prioritized replay buffer is a valid suggestion and wouldn’t be too complex to implement. However, since it involves some extra computation and lookbacks during each step (compared to the vanilla replay buffer I implemented), it would make the solution slower, although the Q-learning algorithm would probably converge faster.

- This is the architecture that I originally submitted myself. It makes training a little bit slower, but the model converges faster due to increased stability.

- Also a valid option.
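To show where the extra per-step computation of a prioritized replay buffer comes from, here is a minimal sketch of proportional prioritization using only the standard library. It is a toy version: it has no sum tree and no importance-sampling weights, and the class name SimplePrioritizedBuffer and its parameters are assumptions for illustration, not the buffer from the repository.

```python
import random
from collections import deque


class SimplePrioritizedBuffer:
    """Toy proportional prioritized replay buffer (no sum tree, no IS weights)."""

    def __init__(self, capacity, alpha=0.6, eps=1e-3):
        self.transitions = deque(maxlen=capacity)
        self.priorities = deque(maxlen=capacity)
        self.alpha = alpha  # 0 = uniform sampling, 1 = fully proportional
        self.eps = eps      # keeps every priority strictly positive

    def add(self, transition):
        # New transitions get the current maximum priority,
        # so they are likely to be sampled soon.
        max_priority = max(self.priorities, default=1.0)
        self.transitions.append(transition)
        self.priorities.append(max_priority)

    def sample(self, batch_size):
        # Recomputing the weights on every call is the extra cost
        # compared to uniform sampling. Sampling is with replacement.
        weights = [p ** self.alpha for p in self.priorities]
        indices = random.choices(range(len(self.transitions)),
                                 weights=weights, k=batch_size)
        return indices, [self.transitions[i] for i in indices]

    def update_priorities(self, indices, td_errors):
        # Transitions with a large TD error are replayed more often.
        for i, err in zip(indices, td_errors):
            self.priorities[i] = abs(err) + self.eps


buffer = SimplePrioritizedBuffer(capacity=100)
for step in range(5):
    buffer.add(("state", step))

# Pretend the last transition had by far the largest TD error.
buffer.update_priorities([0, 1, 2, 3, 4], [0.0, 0.0, 0.0, 0.0, 10.0])
indices, batch = buffer.sample(batch_size=4)
```

The vanilla buffer only needs a single uniform random sample per training step, whereas this version also has to maintain and re-read one priority per stored transition.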

Based on this, I would say that ChatGPT makes some good function-level suggestions for optimizing the agent class, but not solution-level ones, since it lacks the context.

Here’s the code for the optimized DQN Agent

Let’s move on to the environment class. In the last part of this blog series, I pointed out that there is a repeated-code problem in the reward and next state functions. How will ChatGPT react to it?

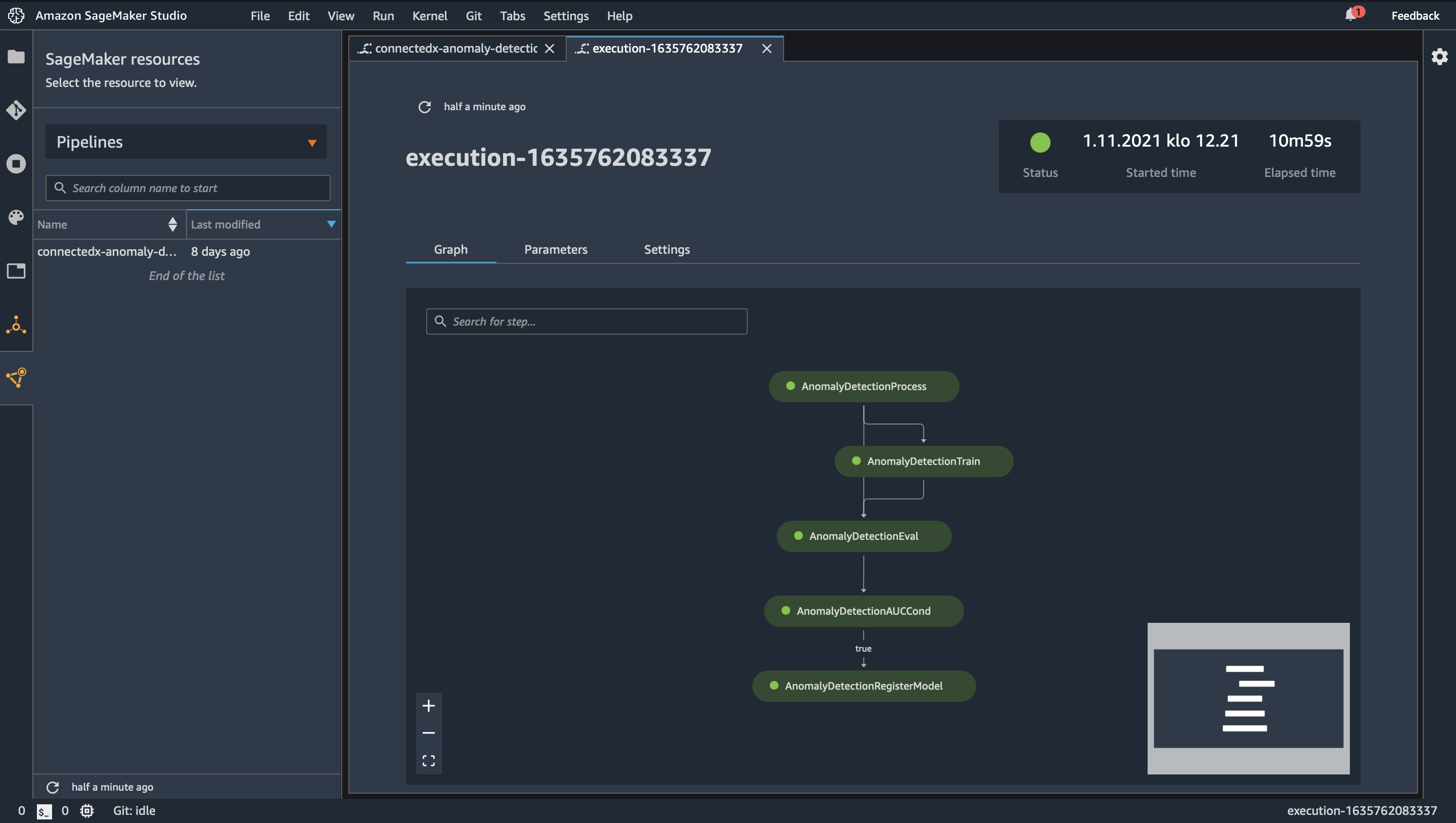

Time to see how ChatGPT would optimize the environment class.

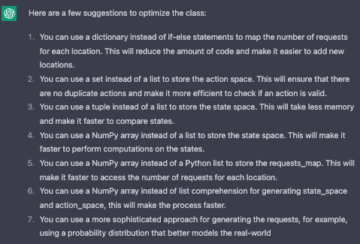

Input: Give a suggestion on how to optimize this class:

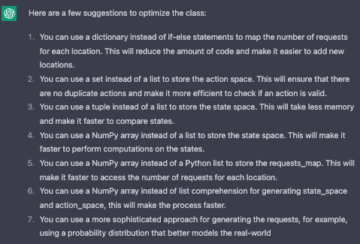

Output:

- The first suggestion is already in the code.

- The second and third suggestions are good, but you must be careful with the state_space tuple, since you need to add the state for an offline action. Once the tuple is created, you cannot modify it.

- Suggestions 4 to 6 resonate well with the previous suggestion of not calling the state vectorization function. If the data were in NumPy format, we wouldn’t need the vectorization calls. This would require extensive refactoring and take some time to achieve.

- The last suggestion is maybe too much for our use case.
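The caveat about the tuple can be illustrated with a short sketch. The entry layout (pickup and drop pairs), the placeholder value for the offline entry, and the variable names other than state_space are assumptions for illustration only.

```python
# Hypothetical entries: (pickup, drop) pairs between 3 locations.
entries = [(p, d) for p in range(3) for d in range(3) if p != d]

# Add the offline entry while the collection is still a mutable list,
# then freeze it into a tuple.
OFFLINE = (0, 0)  # placeholder value, an assumption
entries.append(OFFLINE)
state_space = tuple(entries)

# After creation the tuple cannot be changed in place.
try:
    state_space.append((9, 9))
except AttributeError:
    modified = False
else:
    modified = True

# Extending it later means building a new tuple object.
extended = state_space + ((9, 9),)
```

In other words, everything that belongs in the tuple has to be collected before the conversion, or a new tuple has to be created afterwards.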

Let’s check how ChatGPT optimizes the reward_func and next_state_func where I pointed out that we’re making the same 4D array slicing in both functions.

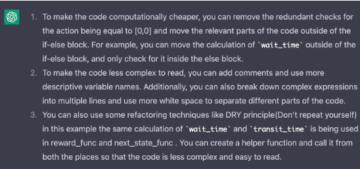

Input: Explain how to make this code snippet computationally less expensive and easier to read. *the reward_func and next_state_func as input*

Output:

Good suggestions. You can see how I implemented these in the repository: optimized environment class
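As a rough idea of how duplicated 4D slicing can be removed, the lookup can be done once in a helper that both functions call. The sketch below is not the repository implementation: the index order [start][end][hour][day], the helper name trip_times, the toy time matrix, and the simplified reward formula are all assumptions for illustration.

```python
def make_time_matrix(n_loc, n_hours, n_days):
    """Build a toy 4D nested list indexed as [start][end][hour][day]."""
    return [[[[abs(s - e) for _ in range(n_days)]
              for _ in range(n_hours)]
             for e in range(n_loc)]
            for s in range(n_loc)]


def trip_times(time_matrix, state, action):
    """The only place where the 4D lookup happens."""
    loc, hour, day = state
    pickup, drop = action
    t_pickup = time_matrix[loc][pickup][hour][day]
    t_ride = time_matrix[pickup][drop][hour][day]
    return t_pickup, t_ride


def reward_func(time_matrix, state, action, revenue=5, cost=1):
    # Simplified reward: revenue for the ride minus cost for all time spent.
    t_pickup, t_ride = trip_times(time_matrix, state, action)
    return revenue * t_ride - cost * (t_pickup + t_ride)


def next_state_func(time_matrix, state, action, n_hours=24, n_days=7):
    _, hour, day = state
    _, drop = action
    t_pickup, t_ride = trip_times(time_matrix, state, action)
    new_hour = hour + t_pickup + t_ride
    # Roll over into the next day (and week) when passing midnight.
    new_day = (day + new_hour // n_hours) % n_days
    return (drop, new_hour % n_hours, new_day)


time_matrix = make_time_matrix(n_loc=3, n_hours=24, n_days=7)
state = (0, 23, 6)   # location 0, hour 23, day 6
action = (1, 2)      # pick up at location 1, drop at location 2
reward = reward_func(time_matrix, state, action)
next_state = next_state_func(time_matrix, state, action)
```

With the lookup in one place, a change to the matrix layout only needs to be made in trip_times, and both functions become shorter and easier to read.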

Summary

As a conclusion to this blog series, I have demonstrated how Data Scientists can use ChatGPT as a tool to streamline their working process and get suggestions for problems or code quality enhancements.

ChatGPT is quite proficient at suggesting good and mostly relevant changes to solutions that already work, but it isn’t yet able to produce a working solution by itself.

It will be quite exciting to start solving business problems with large language models, and personally I can’t wait to test the code-optimized version of GPT-3.5 that Microsoft is now offering: Azure OpenAI Service – Advanced Language Models | Microsoft Azure

If you are interested in learning more about opportunities with generative models and optimization, or are looking for a partner to help you with your use cases, don’t hesitate to contact us.